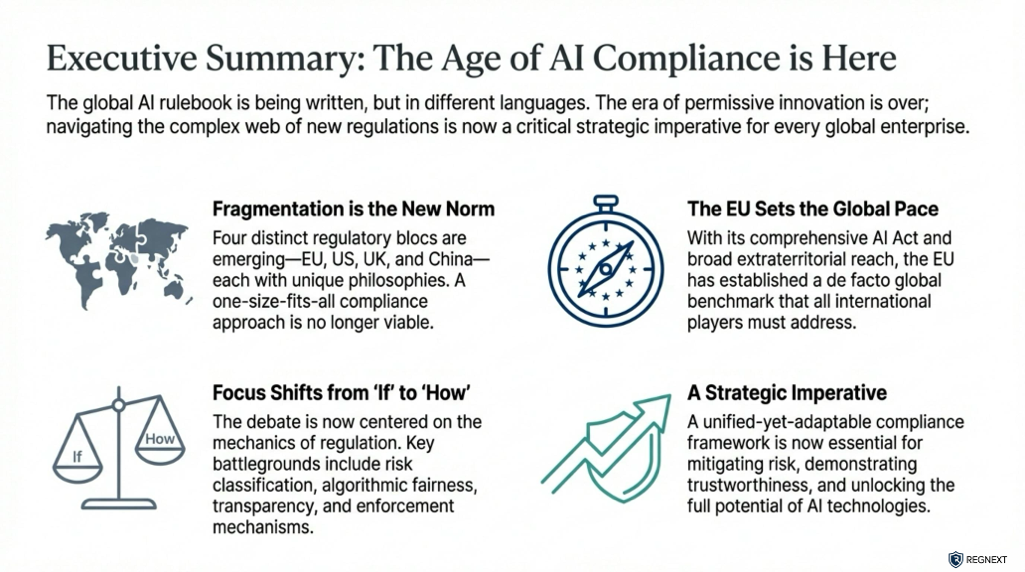

Executive Summary: The Global AI Regulatory Landscape

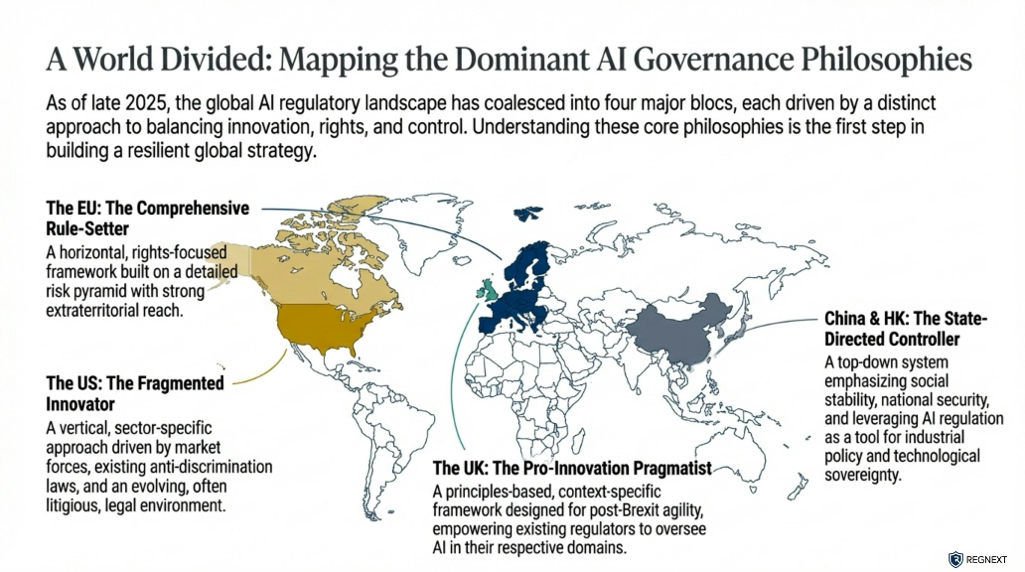

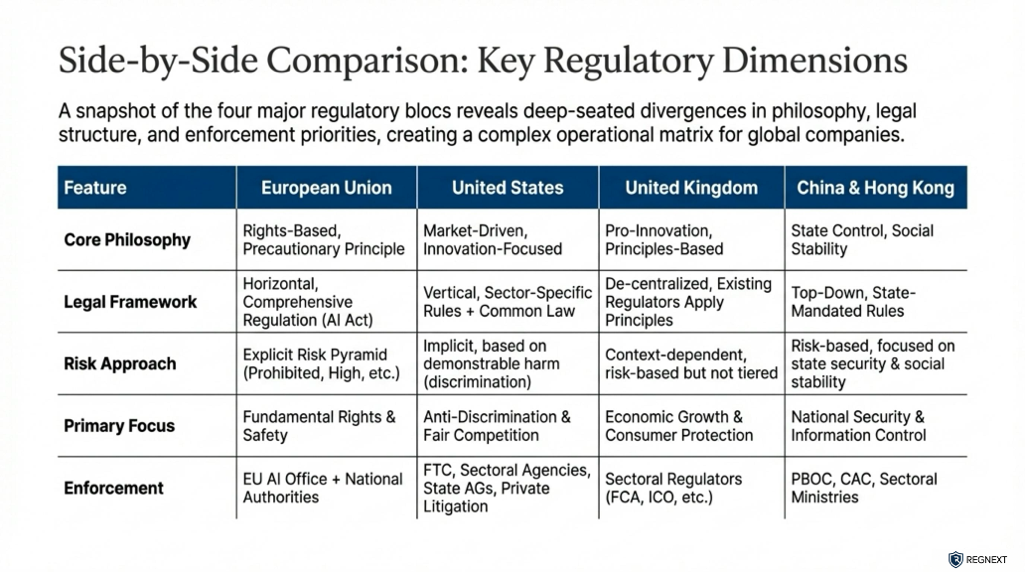

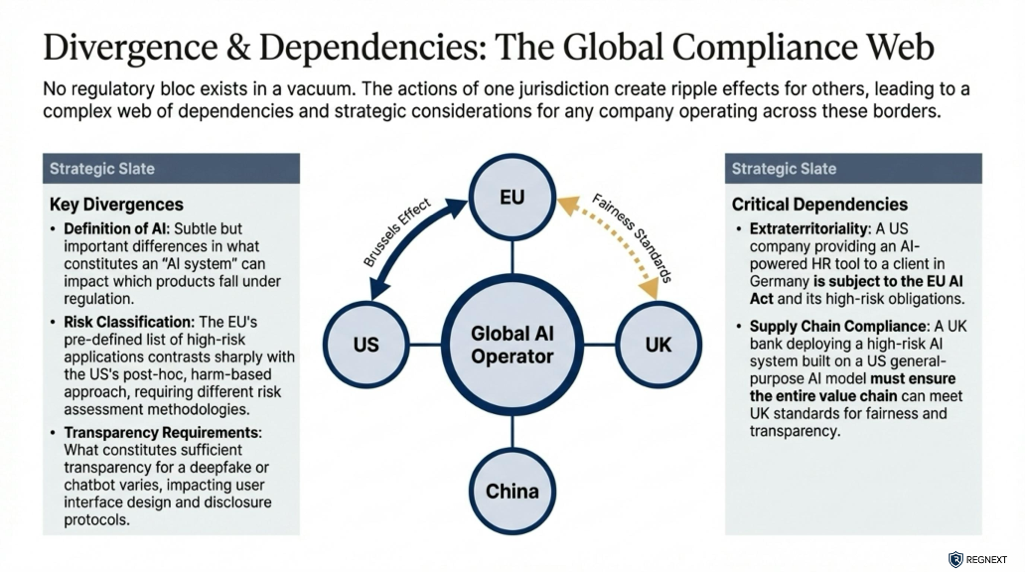

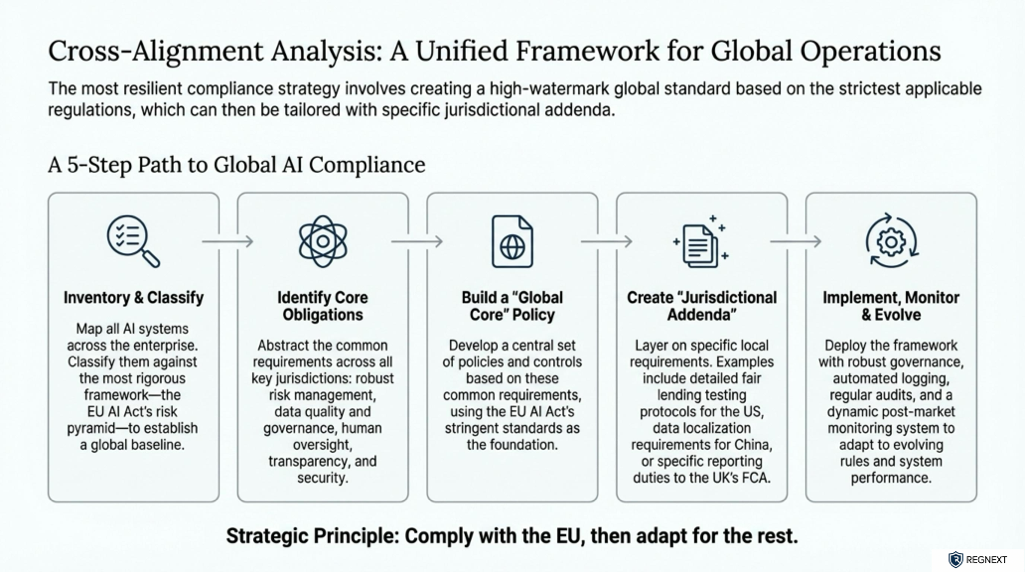

The global landscape for Artificial Intelligence (AI) governance is rapidly evolving as jurisdictions move from theoretical principles to concrete implementation and enforcement. While the shared goal across regions is to harness the benefits of AI while mitigating risks to safety, fundamental rights, and financial stability, the approaches differ significantly—ranging from comprehensive horizontal legislation in the European Union to sector-specific, pro-innovation frameworks in the United Kingdom and the United States.

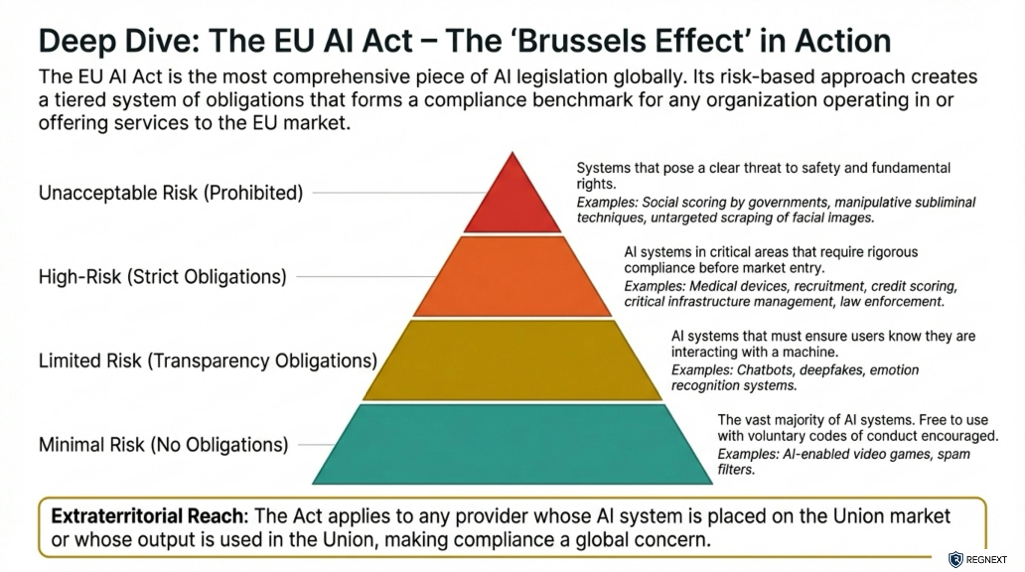

1. The European Union: Comprehensive, Risk-Based Legislation

The EU has established a pioneering legal framework through the AI Act, which lays down harmonized rules for the placing on the market and use of AI systems. This regulation adopts a risk-based approach, categorizing AI practices into different levels of risk:

- Prohibited Practices: AI practices deemed to pose unacceptable risks are banned. Examples include deploying subliminal techniques to materially distort behavior and social scoring of individuals.

- High-Risk Systems: High-risk AI systems are subject to strict obligations, including requirements for data governance, technical documentation, transparency, human oversight, and accuracy.

- General-Purpose AI (GPAI): The framework sets specific rules for GPAI models. Models posing systemic risk face additional obligations, including model evaluation, adversarial testing, and cybersecurity protection.

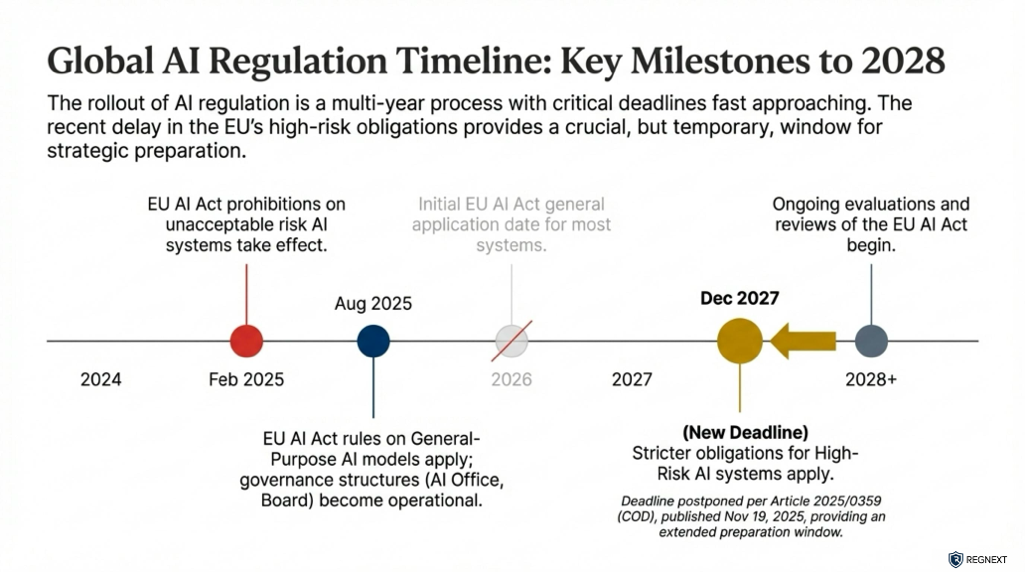

To address implementation challenges, the European Commission recently proposed a "Digital Omnibus" regulation. The proposal aims to simplify compliance in three ways. It extends regulatory simplifications to small mid-caps (SMCs). It centralizes oversight of GPAI models in the AI Office. It also clarifies how the AI Act interacts with other EU legislation.

2. The United States: National Framework and Sectoral Guidance

The U.S. approach combines voluntary frameworks with executive action and sector-specific enforcement.

- National Policy Framework: In December 2025, the White House issued an Executive Order to establish a minimally burdensome national policy framework for AI. The order is intended to preempt inconsistent State laws seen as threatening to stymie innovation. It directs the establishment of an AI Litigation Task Force to challenge State laws that unconstitutionally regulate interstate commerce or otherwise conflict with federal policy.

- Voluntary Frameworks: The National Institute of Standards and Technology (NIST) provides the AI Risk Management Framework (AI RMF). This voluntary guide helps organizations manage AI risks and build trustworthiness considerations into design and development. NIST has also released a companion profile specifically for Generative AI (NIST AI 600-1).

- Sectoral Enforcement: The EEOC and DOJ have warned against disability discrimination in hiring caused by AI tools, emphasizing that existing civil rights laws apply to automated systems. In the financial sector, the OCC and other regulators focus on two areas. The first is third-party risk management. The second is ensuring that AI use does not violate fair lending laws.

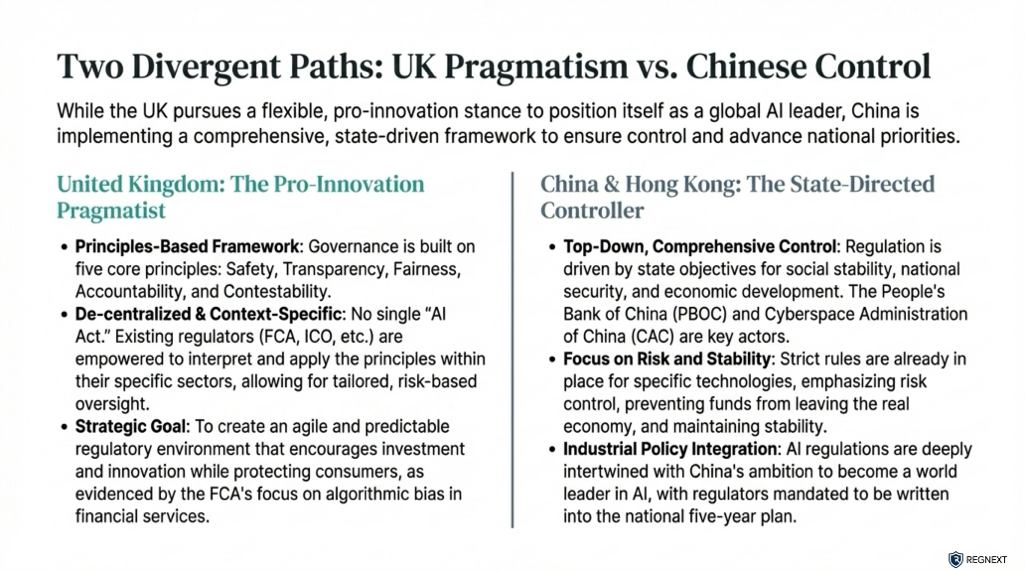

3. The United Kingdom: A Pro-Innovation, Sector-Led Approach

The UK has opted against creating a single new AI regulator. Instead, it relies on existing regulators to apply a common set of cross-sectoral principles within their own domains, with the aim of fostering a "pro-innovation" environment.

- Financial Services: The Financial Conduct Authority (FCA) and the Bank of England maintain a technology-agnostic, outcomes-focused approach. They prioritize monitoring financial stability risks, such as herding behavior in markets and dependence on critical third-party AI providers. Their initiatives include the Bank of England's AI Consortium for public-private engagement and the FCA's AI Live Testing, which helps firms deploy AI safely.

- Data Protection: The Information Commissioner’s Office (ICO) is developing a statutory code of practice to set clear expectations for responsible AI use, focusing on transparency and the protection of personal information in training generative AI models.

4. China and Hong Kong: Focused Guidance and Consumer Protection

- Hong Kong Data Privacy: The Privacy Commissioner for Personal Data (PCPD) has issued the "Model Personal Data Protection Framework". It offers recommendations on AI strategy, governance, and risk assessment to help organizations comply with the Personal Data (Privacy) Ordinance.

- Hong Kong Banking Supervision: The Hong Kong Monetary Authority (HKMA) has issued guiding principles specifically for Generative AI in customer-facing applications. The principles stress a "human-in-the-loop" approach during early deployment stages so that outputs are accurate and not misleading.

- Mainland China: The landscape includes targeted regulations, such as measures for labelling AI-generated content and for the management of generative AI services.

Conclusion

While regulatory styles vary, common themes are emerging globally: a strong emphasis on transparency, the mitigation of bias and discrimination, and the management of third-party risks. As the Bank of England and FCA have noted, cross-border cooperation remains critical because AI technologies and their risks do not respect national boundaries. Organizations operating globally must navigate this patchwork by adopting interoperable risk management strategies. These strategies should address the core principles of safety, fairness, and accountability that these diverse jurisdictions share.